Premature Abstraction

Abstractions are a powerful way of hiding complexity. They can make code easier to test, extend, understand, and reason about. Improper use of abstraction, however, has precisely the opposite effect. The FizzBuzz Enterprise Edition repo is a comical example of over-abstraction: it shows just how complex an inherently simple problem becomes when it is over-engineered. That codebase is meant as satire, but I’ve seen real codebases that are almost on a par with it.

Yagni

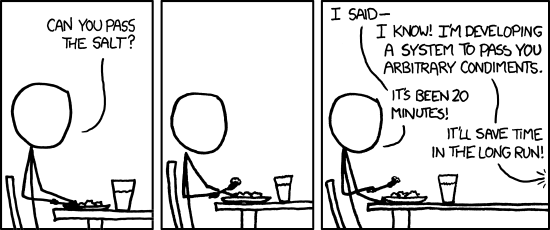

Over-abstraction is often the result of attempting to predict future requirements. The purist in us wants to create something that will handle all of these hypothetical requirements, with the good and honest intention of making life easier for our future selves. But to avoid unnecessary complexity we must be pragmatic in our approach. Unless something is a requirement now, don’t implement it. This concept is called Yagni: “you aren’t gonna need it”. While Yagni is concerned with prematurely implementing distant or unspecified requirements, it applies to abstraction too. There is always a cost associated with premature abstraction. To see why, just consider the possible outcomes:

Case 1 - The extensible code is never extended

If your super extensible code is never actually extended (think of a strategy pattern with only one strategy) then the effort was wasted and your codebase now needlessly carries the additional complexity. In this scenario you take on the cost of build and the cost of carry.
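To make the single-strategy case concrete, here is a minimal sketch in Python. The names `PricingStrategy`, `SumPricing`, `Checkout`, and `checkout_total` are hypothetical, invented purely for illustration. Both versions compute the same total, but the first carries an interface, a class hierarchy, and an injection point that nothing yet uses.

```python
from abc import ABC, abstractmethod
from typing import Sequence


# Over-abstracted version: an interface with exactly one implementation.
# (All names here are hypothetical, chosen only for illustration.)
class PricingStrategy(ABC):
    @abstractmethod
    def total(self, prices: Sequence[float]) -> float:
        ...


class SumPricing(PricingStrategy):
    def total(self, prices: Sequence[float]) -> float:
        return sum(prices)


class Checkout:
    def __init__(self, strategy: PricingStrategy) -> None:
        self._strategy = strategy

    def total(self, prices: Sequence[float]) -> float:
        # Delegates to the only strategy that exists.
        return self._strategy.total(prices)


# Direct version: the same behaviour with nothing extra to carry.
def checkout_total(prices: Sequence[float]) -> float:
    return sum(prices)
```

If a second pricing rule ever becomes a real requirement, the direct function can still be refactored into a strategy at that point, with the benefit of knowing what actually varies.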

Case 2 - The extensible code is eventually extended

Even if the code is eventually extended, you still pay the cost of carry until then. Further, the effort spent on delayed value could’ve been spent on other work that would’ve been immediately valuable (cost of delay). Note that the abstraction has negative value until it becomes useful, and at that point you could have introduced it anyway.

Case 3 - You choose the wrong abstraction

In the worst-case scenario, you choose the wrong abstraction. This can happen when your understanding of the problem domain is insufficient. Further down the line, when requirements change or your understanding of the problem improves, you may find that you have multiple subclasses depending on the bad abstraction. You then pay the cost of carry and must also untangle and re-implement the existing code (cost of repair).

This is not to say that you should only think short term. Rather, you should see the future for what it is: uncertain. Wherever possible, favour simple design over complexity.

Rule of Three

So when is the right time to introduce an abstraction? As explained, it is often insufficient understanding of a problem that leads to bad abstraction. To combat this, we follow the Rule of Three. This rule of thumb states that we should tolerate duplication until similar code appears a third time, and only then refactor. The underlying premise is that by increasing our sample size we make it easier to observe the patterns between use cases, thereby reducing the risk of incorrectly refactoring. Furthermore, the cost of carry for two pieces of similar code is generally a lot lower than the cost of repair that comes with the wrong abstraction.
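As a rough sketch of how this might play out in Python (the functions `format_user`, `format_admin`, and `format_contact` are hypothetical examples, not taken from any real codebase): the first two formatters are left duplicated, and the shared helper is extracted only once a third use case confirms which part actually varies.

```python
# First and second occurrence: similar code, tolerated as duplication.
# (Hypothetical functions, used only to illustrate the rule.)
def format_user(name: str, email: str) -> str:
    return f"User: {name.strip().title()} <{email.strip().lower()}>"


def format_admin(name: str, email: str) -> str:
    return f"Admin: {name.strip().title()} <{email.strip().lower()}>"


# A third occurrence (say, a guest formatter) confirms the pattern:
# only the label varies, so the shared shape is extracted now.
def format_contact(label: str, name: str, email: str) -> str:
    return f"{label}: {name.strip().title()} <{email.strip().lower()}>"
```

Had the helper been extracted after the first formatter alone, there would have been no evidence that the label was the only thing that differed; the extra examples are what make the right parameter visible.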

If you really want to save your future self time and effort, think of Yagni and the Rule of Three.